Introduction:

The growth of human-machine interaction in our daily lives has made user interface technology progressively more important. Physical gestures, as intuitive expressions, greatly ease the interaction process and enable humans to command computers or machines. For example, in telerobotics, slave robots have been demonstrated to follow the master’s hand motions remotely. Other proposed applications of hand gesture recognition include character recognition in 3-D space using inertial sensors, remote control of a television set, use of the hand as a 3-D mouse, and hand gestures as a control mechanism in virtual reality. Gesture recognition has also been proposed for understanding the actions of a musical conductor.

In our work, a miniature MEMS accelerometer-based recognition system that can recognize seven hand gestures in 3-D space is built. The system has potential uses such as a remote controller for visual and audio equipment, or as a control mechanism to command machines and intelligent systems in offices and factories.

Many kinds of existing devices can capture gestures, such as a “Wiimote,” joystick, trackball, and touch tablet. Some of them can also be employed to provide input to a gesture recognizer. However, some gesture-capture technologies, such as a vision system or a data glove, are relatively expensive. To strike a balance between the accuracy of the collected data and the cost of the devices, a Micro Inertial Measurement Unit is utilized in this project to detect the accelerations of hand motions in three dimensions. There are two main types of existing gesture recognition methods: vision-based and accelerometer- and/or gyroscope-based.
Because vision-based methods suffer from limitations such as unexpected ambient optical noise, slower dynamic response, and relatively large data collection and processing requirements [9], our recognition system is built on an inertial measurement unit based on MEMS acceleration sensors. Since gyroscopes would add a heavy computational burden to inertial measurement, our current system uses MEMS accelerometers only; gyroscopes are not used for motion sensing.

Existing gesture recognition approaches include template matching, dictionary lookup, statistical matching, linguistic matching, and neural networks. For sequential data, such as time-series measurements and the acoustic features at successive time frames used in speech recognition, the HMM (Hidden Markov Model) is one of the most important models. It is effective for recognizing patterns with spatial and temporal variation.

In this paper, we present three gesture recognition models: 1) a sign sequence and Hopfield-based gesture recognition model; 2) a velocity increment-based gesture recognition model; and 3) a sign sequence and template matching-based gesture recognition model. To find a simple and efficient solution to the hand gesture recognition problem based on MEMS accelerometers, these three models do not map the acceleration patterns into velocity or displacement or transform them into the frequency domain; instead, the patterns are segmented and recognized directly in the time domain. By extracting a simple feature based on the sign sequence of acceleration, the recognition system achieves high accuracy and efficiency without employing an HMM.
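
The sign-sequence idea can be illustrated with a small sketch. It is not the implementation used in this project: the noise threshold, the two-axis layout, the axis orientation, and the `TEMPLATES` table are all assumptions made for illustration. It relies on the observation that a hand stroke accelerates and then decelerates, so a single stroke along one axis should produce a sign pattern such as positive followed by negative.

```python
def sign_sequence(samples, threshold=0.2):
    """Collapse one axis of acceleration samples into a sequence of signs.

    Samples whose magnitude is below `threshold` (an assumed noise floor)
    are skipped, and runs of samples with the same sign become one entry.
    """
    signs = []
    for a in samples:
        if abs(a) < threshold:
            continue
        s = 1 if a > 0 else -1
        if not signs or signs[-1] != s:
            signs.append(s)
    return signs


# Hypothetical templates: expected (x-axis, y-axis) sign sequences per gesture.
# The gesture names and axis orientation are assumptions, not from the report.
TEMPLATES = {
    "right": ([1, -1], []),
    "left": ([-1, 1], []),
    "up": ([], [1, -1]),
    "down": ([], [-1, 1]),
}


def recognize(x_samples, y_samples, threshold=0.2):
    """Return the name of the template matching the observed signs, or None."""
    observed = (
        sign_sequence(x_samples, threshold),
        sign_sequence(y_samples, threshold),
    )
    for name, template in TEMPLATES.items():
        if observed == template:
            return name
    return None
```

For example, `recognize([0.0, 0.5, 0.9, -0.7, -0.3, 0.0], [0.05, -0.1, 0.0])` matches the assumed "right" template, because the x axis yields the signs `[1, -1]` and the y axis stays below the threshold. Exact matching keeps the sketch short; a practical system would need to tolerate extra sign changes caused by noise.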

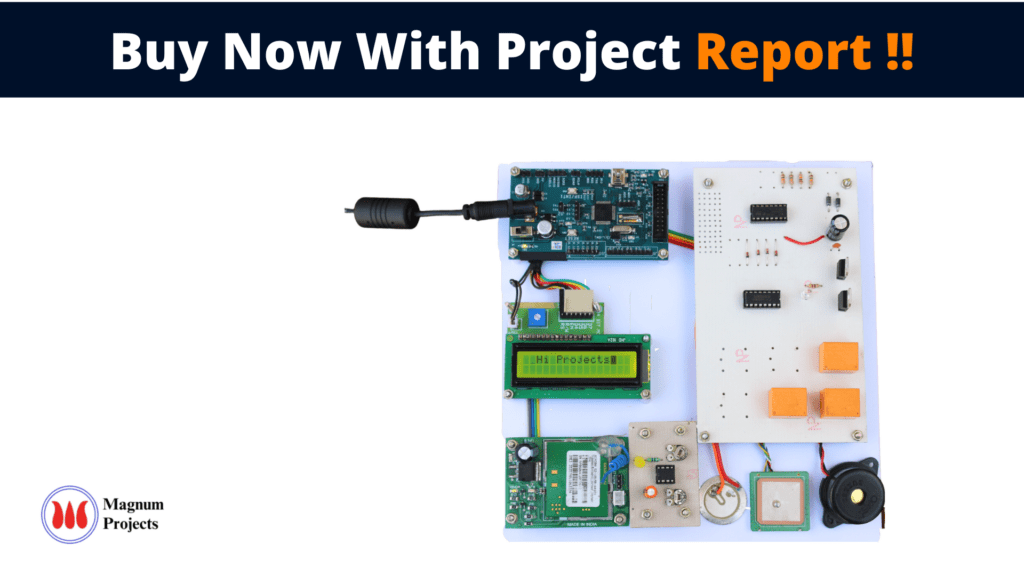

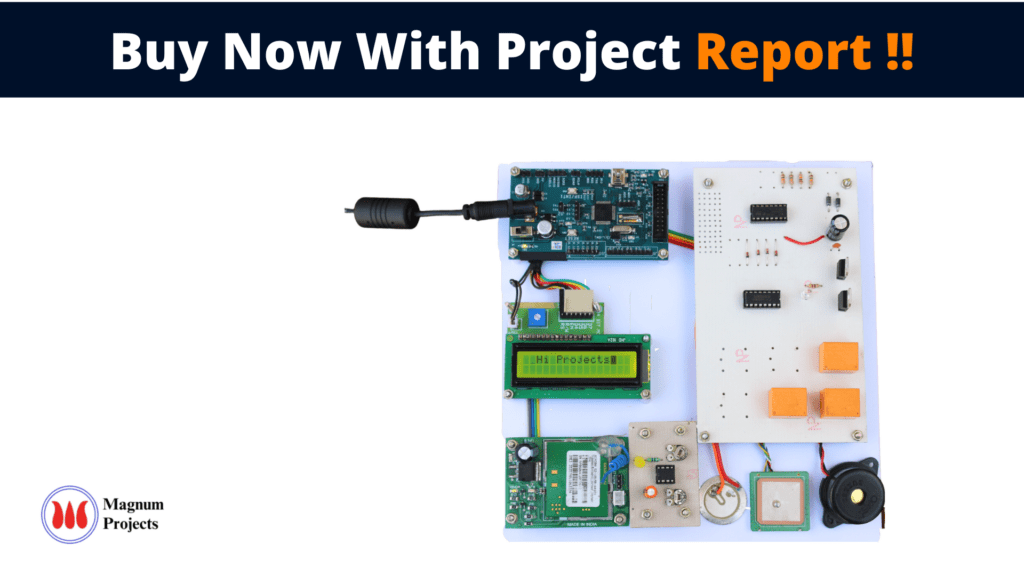

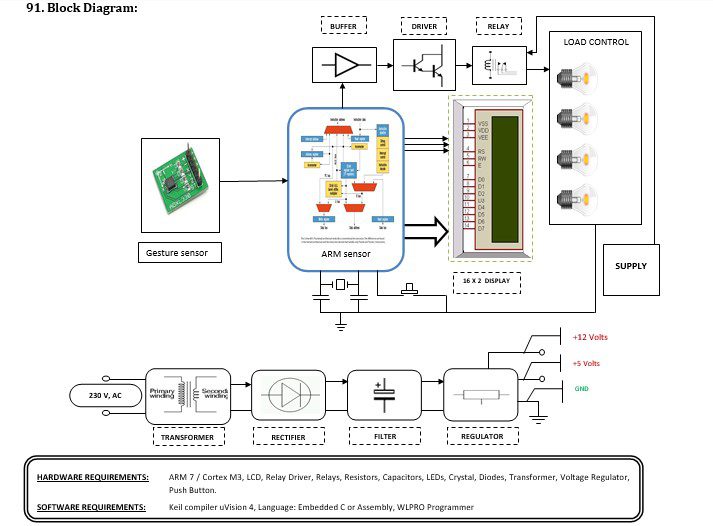

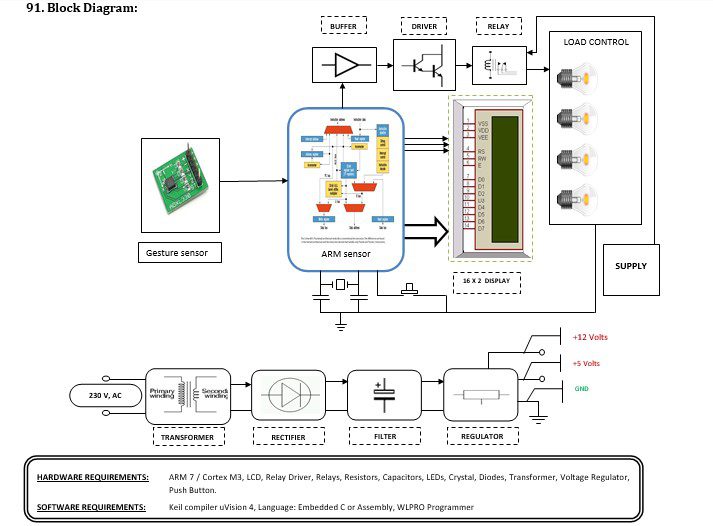

Block diagram explanation:

Power supply unit:

This section requires two working voltages, +12 V and +5 V. A dedicated power supply is therefore built to provide these regulated voltages.

ARM processor:

ARM is a family of computer processors based on a RISC architecture. The RISC design approach means that ARM processors require significantly fewer transistors than the typical processors found in average computers, which reduces cost, heat, and power use. This low power consumption has made ARM processors very popular.

The 32-bit ARM architecture is the most widely used architecture in mobile devices and the most popular 32-bit architecture in embedded systems.

ARM processor features include:

- Load/store architecture.

- An orthogonal instruction set.

- Mostly single-cycle execution.

- A 16 × 32-bit register file.

- Enhanced power-saving design.

Sensor Description

The sensing system used in our experiments for hand motion data collection is shown in Fig. 2. It is essentially a MEMS 3-axis acceleration sensing chip integrated with data management and Bluetooth wireless data chips. The algorithms described in this paper were implemented and run on a PC. Details of the hardware architecture of this sensing system were published by our group in [19] and [20]. The sensing system has also recently been commercialized in a more compact form.

Buffers:

Buffers do not affect the logical state of a digital signal (i.e., a logic 1 input results in a logic 1 output, whereas a logic 0 input results in a logic 0 output). Buffers are normally used to provide extra current drive at the output, but they can also be used to regularize the logic levels present at an interface.

Drivers:

This section drives the relay. Its output is the complement of the input applied to the driver, with the current amplified.

Relays:

A relay is an electromagnetic device used to switch the load connected across it. The output of the relay can be connected to the controller or to the load for further processing.

Indicator:

This stage provides a visual or audible indication of which relay is actuated or deactivated, by lighting the respective LED or sounding the buzzer.
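
The output chain described above (buffer, driver, relay, indicator) can be modelled at the logic level as in the sketch below. It ignores voltages and currents entirely. The function names are hypothetical, and the rule that the relay coil is energized when the driver output is low is an assumption about how the inverting driver is wired, not a detail given in this report.

```python
def buffer(level):
    """Non-inverting buffer: the logic level passes through unchanged."""
    return level


def driver(level):
    """Inverting driver: the output is the complement of the input."""
    return 1 - level


def output_stage(controller_pin):
    """Trace one controller output pin (0 or 1) through the output chain."""
    buffered = buffer(controller_pin)
    driven = driver(buffered)
    # Assumption: the relay coil is energized when the driver output is low.
    relay_on = driven == 0
    # The indicator LED simply mirrors the relay state.
    return {
        "buffer": buffered,
        "driver": driven,
        "relay_on": relay_on,
        "led_on": relay_on,
    }
```

Under these assumptions, a logic 1 on the controller pin gives a driver output of 0 and an energized relay with its LED lit, while a logic 0 leaves the relay off.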

Methodology:

In the proposed method of gesture-based device control, the main controlling device is the ARM controller. When a disabled person wants to enable or disable a device, he or she only needs to make a hand movement. The sensor detects the movement and sends signals to the ARM controller, which then decides which device is to be controlled.
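
The decision step can be sketched as a lookup from a recognized gesture to a device, followed by a state change. The gesture names, the device names, and the choice to toggle a device each time its gesture is seen are all assumptions for illustration; the report does not specify the actual mapping. The sketch is written in Python for readability, whereas the controller firmware would normally be written for the ARM target.

```python
# Hypothetical mapping from recognized gesture to controlled device.
GESTURE_TO_DEVICE = {
    "up": "light",
    "down": "fan",
    "left": "television",
    "right": "audio",
}


class DeviceController:
    """Keeps an on/off state per device and toggles it on a matching gesture."""

    def __init__(self, mapping):
        self.mapping = dict(mapping)
        self.state = {device: False for device in self.mapping.values()}

    def handle(self, gesture):
        """Toggle the mapped device; return (device, new_state) or None."""
        device = self.mapping.get(gesture)
        if device is None:
            return None  # unknown gesture: leave all devices unchanged
        self.state[device] = not self.state[device]
        return device, self.state[device]
```

With this mapping, a first "up" gesture switches the light on, a second one switches it off again, and a gesture that is not in the table changes nothing.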

Advantages:

- Speed and sufficient reliability for the recognition system, with good performance against complex backgrounds.

- The radial form division and boundary histogram for each extracted region overcome the chain shifting problem and the rotation variance problem.

- The exact shape of the hand is obtained, which leads to good feature extraction. The proposed algorithm gives fast and powerful results.

- Simple and active, and can successfully recognize words and alphabet letters. Automatic sampling and augmented data filtering improve system performance.

- The system successfully recognizes static and dynamic gestures and could be applied to mobile robot control.

- Simple, fast, and easy to implement. It can be applied to real systems and to playing games.

- No training is required.

Disadvantages:

- Irrelevant objects might overlap with the hand, and wrong objects are extracted if they are larger than the hand. The performance of the recognition algorithm decreases when the distance between the user and the camera is greater than 1.5 meters.

- System limitations restrict the applications: the arm must be vertical, the palm must face the camera, and the finger color must be a basic color such as red, green, or blue.

- Ambient light affects the color detection threshold.

Applications:

1. 3D Design:

CAD (computer-aided design) is an HCI application that provides a platform for the interpretation and manipulation of 3-dimensional inputs, which can be gestures. Manipulating 3D inputs with a mouse is time-consuming, as the task involves a complicated process of decomposing a six-degree-of-freedom task into at least three sequential two-degree-of-freedom tasks.

2. Tele-presence:

The need for manual operation may arise in cases such as system failure, emergency hostile conditions, or inaccessible remote areas. Often, human operators cannot be physically present near the machines.

3. Virtual reality:

Virtual reality is applied to computer-simulated environments that can simulate physical presence in places in the real world, as well as in imaginary worlds. Most current virtual reality environments are primarily visual experiences, displayed either on a computer screen or through special stereoscopic displays.

4. Sign Language:

Sign languages are the rawest and most natural form of language and can be dated back to as early as the advent of human civilization, when the first theories of sign languages appeared in history. They emerged even before spoken languages. Since then, sign language has evolved and been adopted as an integral part of our day-to-day communication process.